AI in Journalism: What Actually Works (And What Doesn't)

Real-world lessons from the newsroom trenches on extending capacity without losing judgment

Everyone's talking about AI in journalism. My take? It's not about if, but how.

The way I see it, AI should help newsrooms expand what they can do, not replace how they think. Use it to cover content you wouldn't have covered anyway, fill gaps left by staff you've lost, and try new things without breaking the bank.

When I was at Lehigh Valley Public Media, we experimented with AI in a few basic ways. First, we teamed up with United Robots, a Swedish company founded in 2015. From their website:

"We've created our own, unique Content-as-a-Service product based on data science, AI and NLG (Natural Language Generation). A giant content factory, delivering thousands of pieces of automated texts, images, graphics, maps, metadata and more to our clients every single day."

Now, before you brace for robot overlords reporting the local news, hear me out. We worked with United Robots in two focus areas, neither of which we would have wanted our paid professional staff spending time on. This also allowed us to expand our offering without trying to convince our board of directors to fund another reporting position, which, let's be real, wasn't going to happen.

Coverage Expansion Without Headcount

The first focus was traffic reports. It's exactly what it sounds like: United Robots fed reports of road incidents throughout our coverage area into our CMS, using data from TomTom and refreshing automatically. Each article included a Google Maps satellite image showing the location of the incident.

The other was residential real estate content. United Robots tied into the MLS (Multiple Listing Service) and provided us with articles on all the real estate transactions in our coverage area. Beyond individual articles on which homes sold and when, their system generated top-10 lists, such as "10 Most Expensive Homes." This content allowed us to stand up a real estate vertical, a top request from both our readers and our sales team.

For both content channels, we chose direct publishing, meaning no staff touched the content before publication. All content was prominently identified as having been produced by a bot, and an email address was included for readers to provide corrections or share concerns and questions.

Augmenting, Not Replacing

Our other experiment was with Tansa, a company that's been around since 1995 and focuses on "advanced text editing tools for professional publishers."

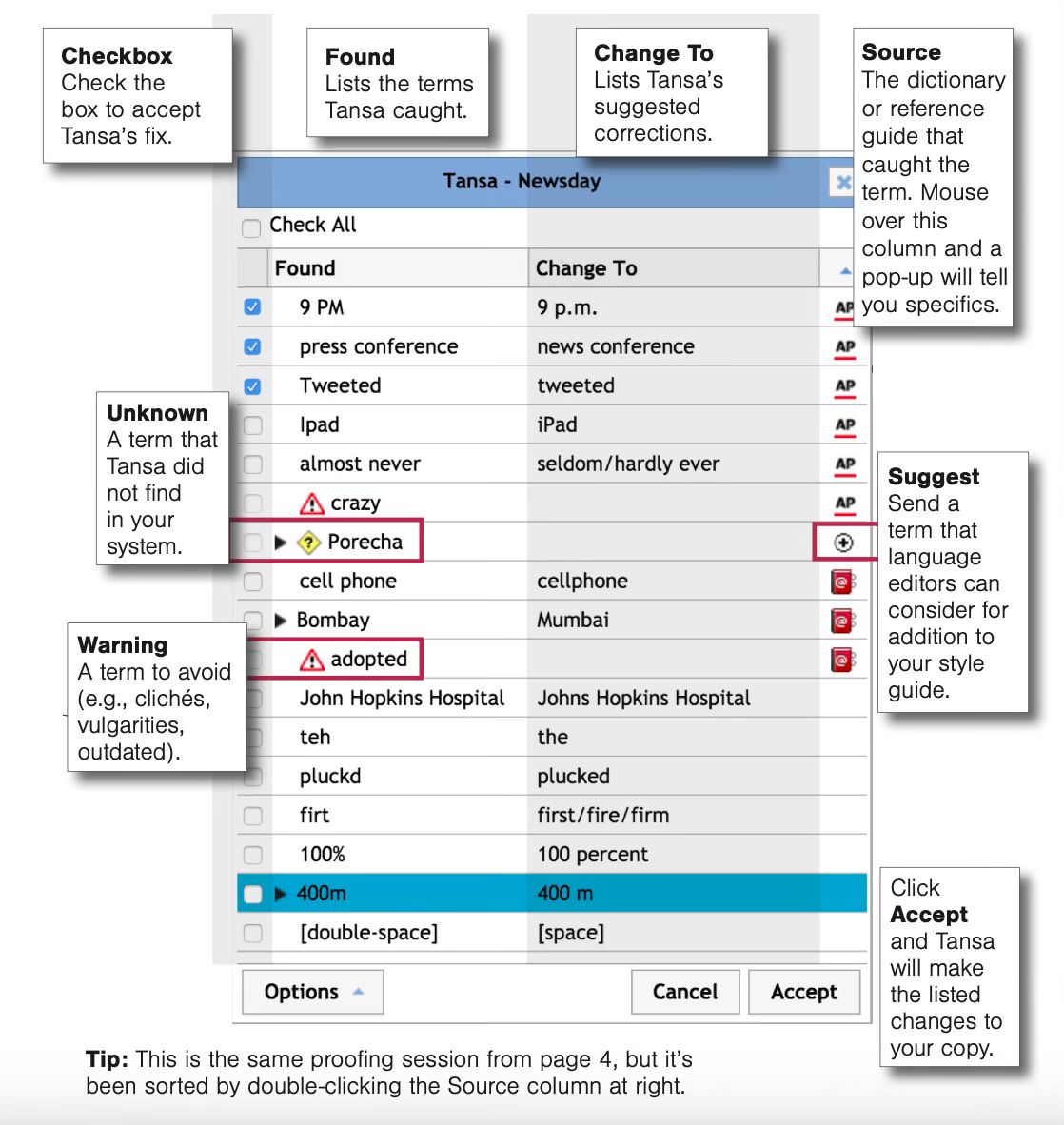

We used their generative AI products to generate SEO headlines, summarize articles, and identify keywords, which let our team work smarter and more efficiently. We also used their copy-editing software. And the best part? The tools integrated seamlessly with our style guide.

I think the staff generally had a positive experience with both tools. Yes, United Robots created some initial anxiety as reporters worried we would use the technology to replace their jobs. But once we made it clear that our intention was only to use it for content we didn't want them spending time on, they relaxed. Since we were a small operation without a copy desk, they especially appreciated the toolset Tansa provided.

Ultimately, staff buy-in came from clear boundaries and transparent internal communication, not just the technology itself.

Three Key Takeaways

Fear comes from unclear intentions. Our staff was rightfully fearful when they first heard we'd be working with United Robots—worried we'd have AI reviewing council meeting agendas and creating articles. Once we talked through our intentions and the guardrails, they felt better about it. Cautious, but better.

Transparency can be a competitive advantage. At a time when trust in media is at a horrible low, being upfront about AI use matters. Many of us heard about the Philadelphia Inquirer and the Chicago Sun-Times getting embarrassed when AI-created special sections included fake content and quotes from fake people. That situation, I believe, was far worse because nowhere did they state they were experimenting with AI or how readers might see the results.

AI is a coverage expansion tool, not a replacement strategy. When news organizations are doing more with less, AI can help them continue providing public services.

Where Does AI Truly Fit?

AI can be an effective tool for research and source work, with the critical caveat that reporters and editors need to double-check its output. One could argue that the time it takes to verify what ChatGPT produces cancels out the time saved by using it. I'd argue the value isn't in saving time but in the depth of research it can provide. Even in traditional reporting, reporters are routinely told to check, check, and double-check their work. What's the old saying? If your mother says she loves you, check it out.

I do not believe AI should automate journalism. I know what you're going to say: "But Yoni, you used Tansa to do copy editing!" Yes, we did, but I'd argue that was augmenting journalism. The concept isn't unique to this AI moment. As many organizations shifted from manual layout to digital, and content management systems became ubiquitous in newsrooms, the technology that came with them augmented traditional processes.

Additionally, with Tansa, the reporter or editor was still involved. Changes, or more often recommended changes, happened under their watch and in many cases required their approval or rejection. This human oversight keeps the work creative rather than mechanical. A newsroom is not a factory churning out widgets.

That said, I do not believe AI should replace common sense, local knowledge, or the traditional journalistic "gut feeling." AI won't be able to identify "Sue Williams," for example, who always sits on the porch outside her house and is an excellent source for what's happening in the neighborhood.

A Framework for Newsroom Leaders

For newsroom leaders wrestling with this, here's what I recommend:

Don't run from AI; embrace it, because it is not going away.

If you don't know or understand it, find someone who does. I'm sure they'd be happy to help.

Start reviewing how your policies should be updated to cover AI. There's no need to reinvent the wheel; look at organizations that have already done this and use their policies as a model.

Work with your team to identify areas of experimentation, and create clearly defined boundaries and expectations.

Overcommunicate internally. Don't allow rumor and innuendo to shape or impede your work with AI.

The future of journalism isn't about choosing between human judgment and artificial intelligence. It's about finding the right balance—using AI to expand what's possible while preserving what makes journalism essential: local knowledge, editorial judgment, and the ability to ask the right questions at the right time.

What's your newsroom's experience with AI? Have you found tools that work—or cautionary tales to share? Hit reply or comment below and let me know. I'm always interested in hearing how other news organizations are navigating this transition.

If this was helpful, please share it with other newsroom leaders who might be wrestling with these same questions. The more we can learn from each other's experiments, the better we'll all get at this.
